Inspired by Stephen Hill’s post over on his self shadow blog, I wanted to put down some thoughts about LEAN mapping and CLEAN mapping for specular highlight filtering.

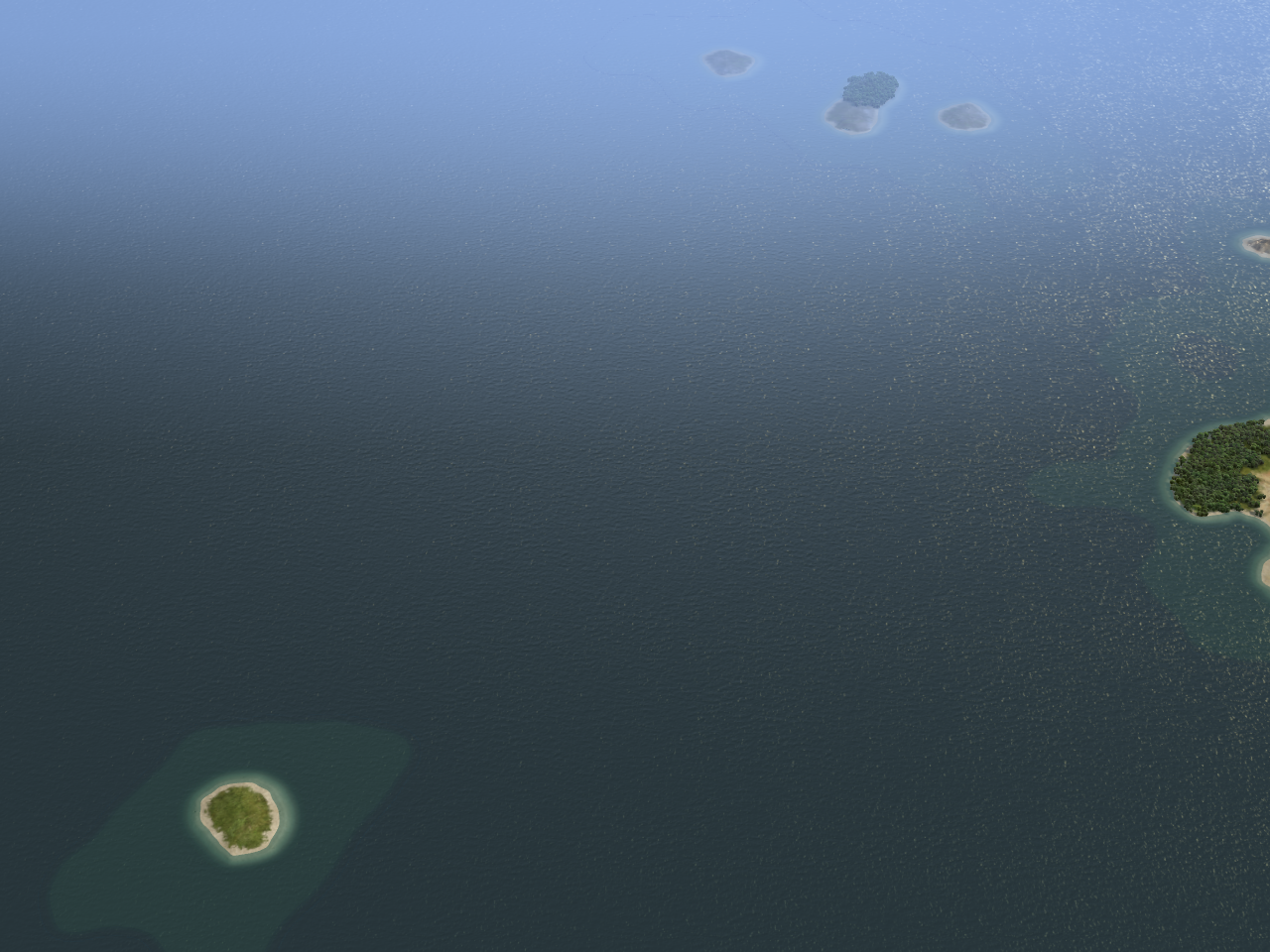

About a year and a half ago, Dan Baker and I published LEAN mapping, a method we developed for filtering normal maps to avoid aliasing for the water in Civilization V. A shiny bumpy surface should look less shiny once it is far enough away that you can’t see the individual bumps. At the Game Developers Conference this year, Dan presented a new lighter-weight version we’re calling CLEAN mapping (Compact LEAN mapping, where LEAN mapping was Linear Efficient Antialiased Normal Mapping).

What is this LEAN mapping?

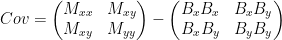

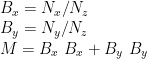

You can read the paper for the nitty-gritty details, but the gist of LEAN mapping is to model the bumps with off-center 2D Gaussian distributions of normal vectors in the surface tangent space. A 2D Gaussian has a center (mean) and an elliptical shape (described by a 2×2 symmetric covariance matrix). You can stick the mean into a texture, and regular texture filtering does the right thing. The same is not true for the covariance, but you can compute the covariance from the raw second moment, and that does do the right thing when filtered. LEAN mapping needs to store at least five pieces of texture data, scaled to fit into the range of a texel. Two for the mean bump direction, with $(n_x, n_y, n_z)$ the tangent-space normal from the normal map,

$$B = (b_x,\; b_y) = \left(\frac{n_x}{n_z},\; \frac{n_y}{n_z}\right)$$

and three for the raw second moments

$$M = (b_x^2,\; b_y^2,\; b_x b_y)$$

At the top level of the MIP chain, these are initialized directly from the normal data. You apply your favorite MIP generation method for the rest of the MIP chain, and the difference between the way the B and M terms filter is what captures the conversion of bump directions into highlight shape. Given those five values in a couple of textures, we can reconstruct the main bump direction and shape of the distribution (= size and shape of the specular highlight). It’s simple, amazingly stable (we used specular powers over 13,000 with 16-bit textures), and has the cool bonus of turning grooved bumps into an anisotropic highlight shape, which happens in real life too.
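As a rough illustration of why filtering B and M separately captures the bump spread, here is a minimal Python sketch. It assumes the bump direction is the tangent-space normal projected onto the z = 1 plane, and it uses a plain box filter as a stand-in for whatever MIP generation you prefer. The function names are made up for this example.

```python
def lean_texel(normal):
    """Top-level LEAN data for one tangent-space normal (nx, ny, nz), nz > 0."""
    nx, ny, nz = normal
    bx, by = nx / nz, ny / nz            # projected bump direction
    B = (bx, by)                         # mean terms
    M = (bx * bx, by * by, bx * by)      # raw second moments
    return B, M

def box_filter(texels):
    """Average B and M component-wise, as one coarser MIP texel would."""
    n = len(texels)
    B = tuple(sum(t[0][i] for t in texels) / n for i in range(2))
    M = tuple(sum(t[1][i] for t in texels) / n for i in range(3))
    return B, M

def covariance(B, M):
    """Covariance (xx, yy, xy): filtered second moment minus squared filtered mean."""
    return (M[0] - B[0] * B[0], M[1] - B[1] * B[1], M[2] - B[0] * B[1])

# Four texels across a groove that runs along y: the normals tilt only in x.
groove = [lean_texel((t, 0.0, 1.0)) for t in (-0.4, -0.2, 0.2, 0.4)]
B, M = box_filter(groove)
cov = covariance(B, M)   # spread appears in xx only, so the highlight is anisotropic
```

For a single unfiltered texel the covariance is zero, because M is exactly the square of B there. Only after averaging do the two diverge, and the groove example ends up with variance in x and none in y.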

To use it, you look up M and B from the texture and use them to reconstruct a covariance matrix for the distribution of normals:

$$\Sigma = \begin{pmatrix} M_{xx} - B_x^2 & M_{xy} - B_x B_y \\ M_{xy} - B_x B_y & M_{yy} - B_y^2 \end{pmatrix}$$

Here $B_x, B_y$ are the two filtered mean components and $M_{xx}, M_{yy}, M_{xy}$ are the three filtered second moments. A few levels down, $M_{xx}$ won’t equal $B_x^2$ anymore, and it’s this difference that matters.

The determinant of this matrix, $\Sigma_{xx}\Sigma_{yy} - \Sigma_{xy}^2$, might come out negative due to numerical error (more on that later). If it is, I just clamp the matrix to 0. I like to add the specular power into the covariance at render-time, though you could add it into $M_{xx}$ and $M_{yy}$ when creating the texture. Then the specular term is computed using a Beckmann distribution (basically a projected Gaussian distribution). Given Blinn-Phong specular power s, and normalized tangent-space light and view vectors Lt and Vt:

$$H_t = L_t + V_t, \qquad \tilde{h} = \left(\frac{H_{t,x}}{H_{t,z}},\; \frac{H_{t,y}}{H_{t,z}}\right), \qquad \Sigma_s = \Sigma + \frac{1}{s} I$$

$$\text{spec} = \frac{1}{2\pi\sqrt{\det \Sigma_s}} \exp\left(-\frac{1}{2}\,(\tilde{h} - B)^T\, \Sigma_s^{-1}\, (\tilde{h} - B)\right)$$
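The render-time evaluation described above can be sketched in Python as follows. This is a sketch rather than the shipped shader. It assumes the formulation from the paper as I read it: specular power s contributes a variance of 1/s on the diagonal, and the half vector is projected onto the z = 1 plane. The function name and the extra guard against negative diagonal entries are my own additions.

```python
import math

def lean_specular(B, M, s, Lt, Vt):
    """Beckmann-style specular term from filtered LEAN data.

    B = (Bx, By), M = (Mxx, Myy, Mxy), s = Blinn-Phong power,
    Lt / Vt = normalized tangent-space light and view vectors.
    """
    # Covariance of the filtered normal distribution.
    cxx = M[0] - B[0] * B[0]
    cyy = M[1] - B[1] * B[1]
    cxy = M[2] - B[0] * B[1]
    # Clamp to zero when numerical error makes the matrix invalid.
    # (Checking the diagonal as well as the determinant is an assumption.)
    if cxx < 0.0 or cyy < 0.0 or cxx * cyy - cxy * cxy < 0.0:
        cxx = cyy = cxy = 0.0
    # Fold in the base highlight: power s is treated as variance 1/s.
    cxx += 1.0 / s
    cyy += 1.0 / s
    det = cxx * cyy - cxy * cxy
    # Half vector, projected onto the z = 1 plane (length cancels out).
    hx, hy, hz = (Lt[i] + Vt[i] for i in range(3))
    if hz <= 0.0:
        return 0.0
    dx = hx / hz - B[0]
    dy = hy / hz - B[1]
    # d^T * inverse(Sigma) * d for a symmetric 2x2 matrix.
    q = (cyy * dx * dx - 2.0 * cxy * dx * dy + cxx * dy * dy) / det
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(det))

# Flat surface lit and viewed head-on, versus a surface with filtered bumps in x.
smooth = lean_specular((0.0, 0.0), (0.0, 0.0, 0.0), 100.0, (0, 0, 1), (0, 0, 1))
rough = lean_specular((0.0, 0.0), (0.1, 0.0, 0.0), 100.0, (0, 0, 1), (0, 0, 1))
```

With the same light and view, the filtered bumps lower the peak of the highlight, which is the "less shiny at a distance" behavior the method is after.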

LEAN thoughts

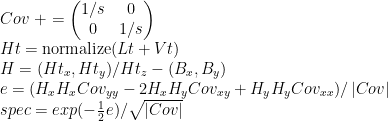

Any method has its drawbacks, and for basic LEAN mapping there are two. The first is the number of texture elements needed. Five values need two textures, which is often too many. If we give up the anisotropic highlight shape, we get CLEAN mapping. Now we just compute three texture elements at the top MIP level, the two projected bump components and the sum of their squares:

$$(b_x,\; b_y,\; b_x^2 + b_y^2)$$

When you look these up with standard texture filtering, the difference between the way they’re filtered gives you a single variance rather than the 2×2 covariance matrix. You don’t get the highlight stretching from grooved bumps, but you do get the bump antialiasing that avoids bump sparkling and shimmering.
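A small Python sketch of the CLEAN variant, under two assumptions: the third channel holds the sum of the squared projected bump components, and the single variance is split evenly between the two axes (the factor of one half). The names are hypothetical, and a box filter again stands in for real MIP generation.

```python
def clean_texel(normal):
    """Three top-level CLEAN values for a tangent-space normal (nx, ny, nz)."""
    nx, ny, nz = normal
    bx, by = nx / nz, ny / nz
    return (bx, by, bx * bx + by * by)

def box_filter(texels):
    """Average all three channels, as a coarser MIP texel would."""
    n = len(texels)
    return tuple(sum(t[i] for t in texels) / n for i in range(3))

def clean_variance(texel):
    """Single isotropic variance from a filtered CLEAN texel."""
    bx, by, m = texel
    # Total spread is m - |B|^2; halving it gives a per-axis variance.
    # The even split between axes is an assumption of this sketch.
    return max(0.0, 0.5 * (m - (bx * bx + by * by)))

# The same groove that would give LEAN an anisotropic highlight.
groove = [clean_texel((t, 0.0, 1.0)) for t in (-0.4, -0.2, 0.2, 0.4)]
variance = clean_variance(box_filter(groove))
```

The groove's spread in x gets smeared into one round variance, so the highlight widens evenly instead of stretching across the groove.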

The second, tougher, problem is the numerical error alluded to above. The variance SHOULD always be positive, and the covariance matrix SHOULD always end up with a positive determinant, but especially at the finest MIP levels, we’re subtracting pairs of very similar values. The specular term adds some padding to that, but if a 1-bit error in the normal is bigger than 1/s, there will be artifacts. In Civ 5, we used 16-bit textures, which gives a good amount of headroom. If you do it using 8-bit textures, you’ll have to limit the steepness of your bumps and/or maximum specular power to avoid problems. For example, if the two components of B, $B_x$ and $B_y$, are limited to -1 to 1, one bit in an 8-bit texture is 1/128, which limits the effective specular power to under 128. Compressed textures are out of the picture as the errors are just too big. So really, direct LEAN mapping is most useful if you have and can afford 16-bit textures.
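To get a feel for the size of the problem, the following Python sketch quantizes B and M for a perfectly flat patch (true variance zero) and measures how far the reconstructed variance strays from zero. The storage ranges (B in -1 to 1, M in 0 to 1), the round-to-nearest encoding, and the helper names are assumptions for illustration, not a description of any particular texture format.

```python
def quantize(x, lo, hi, bits):
    """Round x to the nearest of 2**bits evenly spaced codes in [lo, hi]."""
    levels = (1 << bits) - 1
    code = round((x - lo) / (hi - lo) * levels)
    return lo + code * (hi - lo) / levels

def flat_variance_error(b, bits):
    """Reconstructed variance for a flat patch with slope b (should be 0)."""
    Bq = quantize(b, -1.0, 1.0, bits)      # stored mean
    Mq = quantize(b * b, 0.0, 1.0, bits)   # stored second moment
    return Mq - Bq * Bq

def worst_error(bits):
    """Largest absolute variance error over a sweep of slopes in [-1, 1]."""
    return max(abs(flat_variance_error(i / 100.0, bits)) for i in range(-100, 101))

err8 = worst_error(8)
err16 = worst_error(16)
budget = 1.0 / 13000.0   # padding contributed by a specular power of 13,000
```

Comparing `err8` and `err16` against `budget` shows the gap: in this sweep the 16-bit error stays inside the 1/s padding of a very high specular power, while the 8-bit error does not.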

16-bit textures are feasible for a PC game like Civ V, but for consoles, methods like directly storing the variance in a texture as suggested in Stephen Hill’s post are necessary to avoid the numerical errors. Variance doesn’t filter linearly like the LEAN moments do, so you’ll see some texture filtering issues, but they’re better than the precision errors. Of course, you’ll need to build all of the MIP levels from a high-precision or floating-point LEAN map source, or filter each level directly down from the base texture (so don’t just let the automatic MIP generation do it). Then, at least, the raw variances stored in the texture levels will be right, and the errors will be limited to the hardware texture filtering.
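One way to build such a chain is sketched below in Python: keep the mean and second moment in floating point while filtering, and only convert to a variance when writing each level out. To stay short, it works on a 1D row of texels with a 2:1 box filter and stores a single isotropic variance per texel. The layout and the function name are assumptions of this sketch and are not taken from Stephen Hill’s post.

```python
def build_variance_mips(slopes):
    """Build MIP levels of (bx, by, variance) from float base-level slopes.

    slopes: list of (bx, by) pairs; the length must be a power of two.
    """
    # Float working copy: mean terms plus the raw second moment.
    level = [(bx, by, bx * bx + by * by) for bx, by in slopes]
    mips = []
    while True:
        # Convert to variance in full precision, just before storage.
        mips.append([(bx, by, max(0.0, m - (bx * bx + by * by)))
                     for bx, by, m in level])
        if len(level) == 1:
            break
        # Filter the moments, never the stored variances.
        level = [tuple((a + b) / 2.0 for a, b in zip(level[i], level[i + 1]))
                 for i in range(0, len(level), 2)]
    return mips

mips = build_variance_mips([(-0.5, 0.0), (0.5, 0.0), (0.25, 0.0), (0.25, 0.0)])
```

Each stored level then carries the correct variance for its footprint. Quantizing to the final texture format would happen after this step, so the precision loss lands on the variance itself and not on a difference of two nearly equal values.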

Edit: There are some problems with the error analysis in this post. See this follow-up for a full (and better) analysis.