Doing my best impersonation of someone who blogs with more regularity than I really do…

I glossed over (flubbed?) the error analysis a little in my last post, and should really do a better job. I’ll look at CLEAN/LEAN mapping, but the analysis methods are useful in lots of situations where you compute something from a texture.

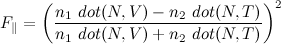

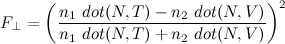

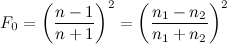

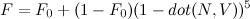

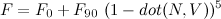

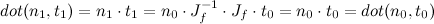

To keep things simple, I’ll use a simplified form of the (C)LEAN variance computation for a single slope component, where B is the bump slope and M is its second moment:

$$V = M - B^2$$

The error in this expression is especially important in (C)LEAN mapping since it determines the maximum specular power you can use, and how shiny your objects can be. For specular power s, 1/s has to be bigger than the maximum error in V, or you’ll get some ugly artifacts.
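Here is a quick way to see the constraint in action. The Python sketch below takes a region of perfectly constant slope, where the true variance is exactly zero. It quantizes M and B to 8 bits the way a texture would, then compares the resulting spurious variance against 1/s for a few specular powers. It assumes the simplified V = M - B^2, the ranges [0, 1] for M and [-1, 1] for B, and plain round-to-nearest quantization. `quantize` and `variance` are hypothetical helpers, not part of any API.

```python
def quantize(x, lo, hi, bits=8):
    """Round x to the nearest of 2**bits evenly spaced levels covering [lo, hi]."""
    levels = (1 << bits) - 1          # 255 steps for an 8-bit channel
    t = round((x - lo) / (hi - lo) * levels)
    return lo + t * (hi - lo) / levels


def variance(m, b):
    """Simplified (C)LEAN variance: second moment minus squared mean slope."""
    return m - b * b


# Every texel has the same slope, so the true variance is exactly zero.
b_true = 0.3
m_true = b_true * b_true

# Store M in [0, 1] and B in [-1, 1], then rebuild V from the stored values.
v_quant = variance(quantize(m_true, 0.0, 1.0), quantize(b_true, -1.0, 1.0))

for s in (16, 128, 1024):
    safe = abs(v_quant) < 1.0 / s
    print(f"s = {s:4d}: |V error| = {abs(v_quant):.6f}, 1/s = {1.0 / s:.6f}, safe = {safe}")
```

With these particular numbers, the spurious variance is under 1/128 but just over 1/1024. A specular power of 1024 would therefore already show the quantization error.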

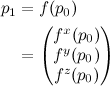

M and B come from a texture, so they have inherent errors of $\delta M$ and $\delta B$ due to the texture precision. The error in each will be 1/2 of the texel precision. For example, with texel values from 0 to 255, a raw texel of 2 could represent a true value anywhere from 1.5 to 2.5, all of which are within 0.5 of the texel value.

In general, we’ll scale and bias to use as much of the texture range as we can. The final error for an 8-bit texture is then about range/512. Strictly, it is range/510, because 256 levels give 255 steps, but range/512 is close enough and keeps everything in powers of two. For data that ranges from 0 to 1, the range is 1 and the representation error is 1/512. For data that ranges from -1 to 1, the range is 2, so the representation error is 2/512 = 1/256.
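The representation error can be checked numerically. This sketch sweeps densely across a range and records the worst quantization error. It uses a hypothetical round-to-nearest `quantize` helper, which is an assumption and is not taken from any particular GPU format specification. Because 256 levels give 255 steps, the measured maximum comes out at range/510, which is the exact value behind the range/512 rule of thumb.

```python
def quantize(x, lo, hi, bits=8):
    """Snap x to the nearest of 2**bits evenly spaced levels covering [lo, hi]."""
    levels = (1 << bits) - 1
    t = round((x - lo) / (hi - lo) * levels)
    return lo + t * (hi - lo) / levels


def max_representation_error(lo, hi, bits=8, samples=100_000):
    """Largest |x - quantize(x)| seen over a dense, evenly spaced sweep of [lo, hi]."""
    worst = 0.0
    for i in range(samples + 1):
        x = lo + (hi - lo) * i / samples
        worst = max(worst, abs(x - quantize(x, lo, hi, bits)))
    return worst


for lo, hi in [(0.0, 1.0), (-1.0, 1.0)]:
    measured = max_representation_error(lo, hi)
    print(f"[{lo}, {hi}]: measured {measured:.6f}, "
          f"range/510 = {(hi - lo) / 510:.6f}, range/512 = {(hi - lo) / 512:.6f}")
```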

To first order, the error in each parameter propagates into the final result scaled by the corresponding partial derivative. $\partial V/\partial M$ is 1, so the error due to M is simple:

$$\delta V_M = \delta M$$

The error due to B is a little more complicated, since $|\partial V/\partial B| = 2|B|$. We’re interested in the magnitude of the error, since we don’t even know whether $\delta B$ was positive or negative to start with. We’re mostly interested in its largest possible value. That gives

$$|\delta V_B| = 2|B|\,\delta B \le 2\,\max|B|\,\delta B$$

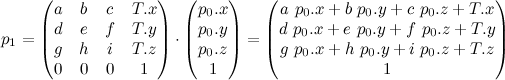
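To sanity-check that bound, the following sketch perturbs M and B by the full ±δ in every sign combination. It compares the worst observed change in V against the first-order bound δM + 2·max|B|·δB. It assumes V = M - B^2, and all function names are made up for illustration.

```python
import itertools


def variance(m, b):
    return m - b * b


def error_bound(b_max, dm, db):
    """First-order bound: |dV/dM| * dM + |dV/dB| * dB, with |dV/dB| <= 2 * b_max."""
    return dm + 2.0 * b_max * db


def worst_perturbation(b_max, dm, db, steps=200):
    """Brute-force the largest |V(M+eM, B+eB) - V(M, B)| for |eM| = dm, |eB| = db."""
    worst = 0.0
    for i in range(steps + 1):
        b = -b_max + 2.0 * b_max * i / steps
        m = b * b  # V is linear in M, so the choice of M does not matter
        for em, eb in itertools.product((-dm, dm), (-db, db)):
            worst = max(worst, abs(variance(m + em, b + eb) - variance(m, b)))
    return worst


b_max, dm, db = 1.0, 1 / 512, 1 / 256
print("brute force:", worst_perturbation(b_max, dm, db))
print("bound:      ", error_bound(b_max, dm, db))
```

The brute-force value exceeds the linear bound only by the second-order term δB² (here 1/65536). That term is why it is safe to drop it in the analysis.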

Generally, you’re interested in whichever of these errors is biggest. The actual error depends on the maximum value of B, and on how big the texel precision ends up being after whatever scale is used to map M and B into the texture range. So, for a few options:

| B range | -1 to 1 | -2 to 2 | -1/2 to 1/2 |
|---|---|---|---|
| Max bump slope | 45° | 63.4° | 26.6° |
| $\delta B$ | 1/256 | 1/128 | 1/512 |
| Error due to B, $2\,\max B\,\delta B$ | 2*1/256 = 1/128 | 2*2/128 = 1/32 | 2*.5/512 = 1/512 |
| M range | 0 to 1 | 0 to 4 | 0 to 1/4 |
| Error due to M, $\delta M$ | 1/512 | 1/128 | 1/2048 |
| Max error in V | 1/128 | 1/32 | 1/512 |
| Max specular power | 128 | 32 | 512 |
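The table can be regenerated with exact fractions, which also makes it easy to try other slope limits. This sketch assumes B is mapped to [-b, b] and M to [0, b²], with the 8-bit range/512 rule. `table_column` is a hypothetical helper name.

```python
import math
from fractions import Fraction


def table_column(b_max, bits=8):
    """Error budget when B is mapped to [-b_max, b_max] and M to [0, b_max**2]."""
    b_max = Fraction(b_max)
    half_step = Fraction(1, 2 ** (bits + 1))   # range/512 for an 8-bit texture
    db = (2 * b_max) * half_step               # B spans a range of 2*b_max
    dm = (b_max * b_max) * half_step           # M spans a range of b_max**2
    err_b = 2 * b_max * db                     # |dV/dB| * dB at the largest |B|
    err_m = dm                                 # |dV/dM| = 1
    worst = max(err_b, err_m)
    return {
        "max slope (deg)": round(math.degrees(math.atan(b_max)), 1),
        "dB": db,
        "error due to B": err_b,
        "dM": dm,
        "max error": worst,
        "max spec power": 1 / worst,
    }


for b in (1, 2, Fraction(1, 2)):
    col = table_column(b)
    print(f"B in [-{b}, {b}]:", {k: str(v) for k, v in col.items()})
```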

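We can make this all a little simpler if we recognize that, at least with the simple range-mapping scheme used here, $\delta B$ and $\delta M$ also depend on $b = \max|B|$. B is stored over a range of $2b$ and M over a range of $b^2$, so for an 8-bit texture

$$\delta B = \frac{2b}{512} = b\,2^{-8}, \qquad \delta M = \frac{b^2}{512} = b^2\,2^{-9},$$

and the two error terms become

$$|\delta V_B| \le 2b\,\delta B = b^2\,2^{-7}, \qquad \delta V_M = \delta M = b^2\,2^{-9}.$$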
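The same argument can be generalized to a texture with $n$ bits per channel. This is a straightforward extension of the scheme above rather than something worked out in the original analysis: the half-texel precision becomes $2^{-(n+1)}$ of the range, and $b = \max|B|$ as before.

```latex
\begin{aligned}
\delta B   &= \frac{2b}{2^{n+1}} = b\,2^{-n}, &
\delta M   &= \frac{b^2}{2^{n+1}} = b^2\,2^{-(n+1)}, \\
\delta V_B &= 2b\,\delta B = b^2\,2^{1-n}, &
\delta V_M &= \delta M = b^2\,2^{-(n+1)} = \tfrac{1}{4}\,\delta V_B, \\
s_{\max}   &= \frac{1}{\delta V_B} = \frac{2^{\,n-1}}{b^2}.
\end{aligned}
```

For $n = 8$ and $b = 1$ this gives $s_{\max} = 2^7 = 128$, matching the table. The constant factor of 4 between $\delta V_B$ and $\delta V_M$ is where the two-bit difference between B and M comes from.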

So, this says the error changes with the square of the max normal-map slope, and that the precision of B is always the limiting factor. In fact, if there were an appropriate texture format, M could be stored with two fewer bits than B, since its error is a factor of four smaller. For 16-bit textures, rather than $2^{-9}$ for the texture precision, you’ve got $2^{-17}$, giving a maximum safe specular power of $2^{15} = 32768$ for bumps clamped to a slope of 1. There’s no need for the slope limit to be a power of 2, so you could fit it directly to the data. However, it’s often better to give your artists a firm rule of thumb (spec powers less than x) than some complex relationship (steeper normal maps can’t be as shiny, according to some fancy formula; yeah, that’ll go over well).
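The next sketch turns this into the kind of rule of thumb you can hand to artists. It computes the maximum safe specular power for a given bit depth and slope limit, and also the inverse. It assumes the same range mapping as above and that the B term dominates. Both function names are made up for illustration.

```python
import math


def max_spec_power(bits, max_slope=1.0):
    """Specular power s at which 1/s equals the worst-case error in V; stay below it.

    Assumes B is stored in [-max_slope, max_slope] and M in [0, max_slope**2]
    with `bits` bits per channel, so the B term dominates:
    error = 2 * max_slope * dB = max_slope**2 * 2**(1 - bits).
    """
    error = max_slope ** 2 * 2.0 ** (1 - bits)
    return 1.0 / error


def max_slope_for(bits, spec_power):
    """Inverse: the steepest slope limit that still supports spec_power."""
    return math.sqrt(2.0 ** (bits - 1) / spec_power)


for bits in (8, 16):
    print(f"{bits}-bit, slope 1: max spec power {max_spec_power(bits):g}")
```

For example, an 8-bit texture with slopes clamped to 1 gives 128, and a 16-bit one gives 32768, matching the numbers above. To fit the limit directly to the data, pass a non-power-of-two `max_slope` measured from your own normal maps.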